Parallel-in-Time methods for Initial Value Problems

Working Groups: Lehrstuhl Computational Mathematics

Collaborators (MAT): Prof. Dr. Daniel Ruprecht, Dr. Sebastian Götschel, Dr. Thibaut Lunet

Collaborators (External): Martin Gander, Robert Speck, Jens Hahne

Description

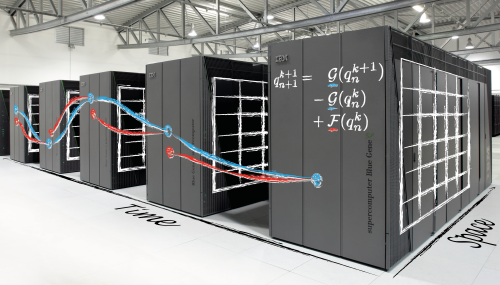

Recent advances in supercomputing architectures in the last decades have pushed the scientific community to develop more efficient algorithms for high performance computing (HPC). In particular, the switch from improving processor speed to increasing concurrency in HPC have motivated the development of new parallelization algorithms that can harness the computing power of massively parallel HPC architectures.

For two decades, there have been research efforts in developing parallel computing capabilities for time integration methods used the simulation of time dependent problems (Numerical Weather Prediction, Computational Fluid Dynamics, Fluid-Structure interactions, …). However, to develop such “Parallel-in-Time” algorithms (or PinT), one needs to deal with the sequential nature of time: it is necessary to know the past and the present before computing the future. Hence, computing past, present and future in parallel needs some fundamental changes in the classical computation paradigms used for time-dependent problems. To tackle this problems, new algorithms have been developped in the last two decades, see [Gander15] for an historical overview.

The superstar of parallel-in-time algorithms : Parareal

The idea of PinT method already dates from the 1960’s [Nievergelt64], but a renewed attention on those methods appears with the invention of the Parareal algorithm by Lions, Maday and Turinici [Lions01]. The main benefit of this algorithm is its simplicity of application and generality. Suppose you already have a system of (non-)linear Ordinary Differential Equations (ODE) of the form :

\[ \frac{d\textbf{q}}{dt} = \textbf{f}(\textbf{q},t), \quad t \in [0, T], \quad \textbf{q}(0) = \textbf{q}_0, \]

obtained, e.g from the space discretization of a Partial Differential Equation. Consider you solve it with a numerical method that computes a numerical solution at any time, starting from the initial value :

\[ \textbf{q}(t) = \underset{0 \rightarrow t}{\mathcal{F}}(\textbf{q}_0) \]

Then to apply Parareal, one just has to consider a few stages :

- Decompose the global time interval \([0, T]\) into \(N\) uniform sub-interval of size \(\Delta T\), noted \([T_n, T_{n+1}]\).

- Define a coarse approximation of your solver \(\mathcal{F}\), that should be cheaper to use, but produces a less accurate solution : \[ \textbf{q}_{approx}(t) = \underset{0 \rightarrow t}{\mathcal{G}}(\textbf{q}_0) \]

- Compute a coarse (cheap) numerical approximation \(\textbf{q}_n^0:=\textbf{q}_{approx}(T_n)\) on all the sub-intervals \[ \textbf{q}_{n+1}^0 = \mathcal{G}(\textbf{q}_n^0) \]

- Compute a fine solution with \(\mathcal{F}\) on each subinterval in parallel and correct the final solution on each subinterval using the coarse solver \(\mathcal{G}\) : \[ \textbf{q}_{n+1}^{k+1} = \mathcal{G}(\textbf{q}_n^{k+1}) - \mathcal{G}(\textbf{q}_n^{k}) + \mathcal{F}(\textbf{q}_n^k) \]

The last iteration is repeated until some convergence criterion is reached. Since the coarse solver \(\mathcal{G}\) is supposedly very cheap, then the most part of the iteration cost is represented by \(\mathcal{F}\). But since the later computation can be performed on each time sub-interval separately, we are then doing parallel-in-time computations.

Below is a short video on the application of Parareal to the Lorentz system, doing respectively one or two iterations, and showing the speedup brought by the Parareal algorithm, along with the approximation error that comes inevitably with it.

Analysis of Iterative PinT methods

The most important PinT algorithms are iterative methods, like Parareal, that can be used with many different parameters. For instance, using Parareal require to define a given sub-interval decomposition, and a coarse solver \(\mathcal{G}\). Those have a direct impact on the speed of convergence of the method, which will impact directly its potential parallel speedup (computation acceleration in comparison to a standard time-sequential computation).

Many other algorithms have been developed after Parareal, most of them are also iterative methods : they perform iteration with part of the work done in parallel, and expect to retrieve a solution with a sufficient accuracy faster than a standard time-sequential solver. To assert this property, one need

- Some error criterion that predict the number of required iterations

- Some speedup analysis that model the potential speedup for a given iteration

Combining both aspects allow to assess the applicability of PinT methods for given types of problem. Recently, a new analysis framework have been developped to describe and analyse the most important PinT algorithms under the same perspective, and produce generic error bounds [Gander22]. Current ongoing work is focussing on the extension of this framework to any iterative PinT algorithm, and the combination with a speedup modeling allowing to find the best algorithms and parameters for a given type of problems.

References

[Gander15] Gander, M. J. (2015). 50 years of time parallel time integration. In Multiple shooting and time domain decomposition methods (pp. 69-113). Springer, Cham.

[Nievergelt64] Nievergelt, J. (1964). Parallel methods for integrating ordinary differential equations. Communications of the ACM, 7(12), 731-733.

[Lions01] Lions, J. L., Maday, Y., & Turinici, G. (2001). Résolution d’EDP par un schéma en temps «pararéel». Comptes Rendus de l’Académie des Sciences-Series I-Mathematics, 332(7), 661-668.

[Gander22] Gander, M. J., Lunet, T., Ruprecht, D., & Speck, R. (2022). A unified analysis framework for iterative parallel-in-time algorithms.